Teams of experts and citizen scientists help build image classifiers from satellite imagery of Earth to spot signs of natural disasters.

by SCOTT MARTIN

When freak lightning ignited massive wildfires across Northern California last year, it also sparked efforts from data scientists to improve predictions for blazes.

One effort came from SpaceML, an initiative of the Frontier Development Lab, which is an AI research lab for NASA in partnership with the SETI Institute. Dedicated to open-source research, the SpaceML developer community is creating image recognition models to help advance the study of natural disaster risks, including wildfires.

SpaceML uses accelerated computing on petabytes of data for the study of Earth and space sciences, with the goal of advancing projects for NASA researchers. It brings together data scientists and volunteer citizen scientists on projects that tap into the NASA Earth Observing System Data and Information System data. The satellite information came from recorded images of Earth — 197 million square miles — daily over 20 years, providing 40 petabytes of unlabeled data.

“We are lucky to be living in an age where such an unprecedented amount of data is available. It’s like a gold mine, and all we need to build are the shovels to tap its full potential,” said Anirudh Koul, machine learning lead and mentor at SpaceML.

Stoked to Make a Difference

Koul, who works as a data scientist at Pinterest, said the California wildfires damaged areas near his home last fall. The San Jose resident and avid hiker said they scorched some of his favorite hiking spots at nearby Mount Hamilton. His first impulse was to join as a volunteer firefighter, but he realized his biggest contribution could come from lending his data science chops.

Koul enjoys work that helps others. Before volunteering at SpaceML, he led AI and research efforts at startup Aira, which uses augmented reality glasses to dictate for the blind what’s in front of them with image identification paired to natural language processing.

Aira, a member of the NVIDIA Inception accelerator program for startups in AI and data science, was acquired last year.

Inclusive Interdisciplinary Research

The work at SpaceML combines volunteers without backgrounds in AI with tech industry professionals as mentors on projects. Their goal is to build image classifiers from satellite imagery of Earth to spot signs of natural disasters.

Groups take on three-week projects that can examine everything from wildfires and hurricanes to floods and oil spills. They meet monthly with NASA scientists, who bring domain expertise, for evaluations.

Contributors to SpaceML range from high school students to graduate students and beyond. The work has included participants from Nigeria, Mexico, Korea, Germany and Singapore.

SpaceML’s team members for this project include Rudy Venguswamy, Tarun Narayanan, Ajay Krishnan and Jeanessa Patterson. The mentors are Koul, Meher Kasam and Siddha Ganju, a data scientist at NVIDIA.

Assembling a SpaceML Toolkit

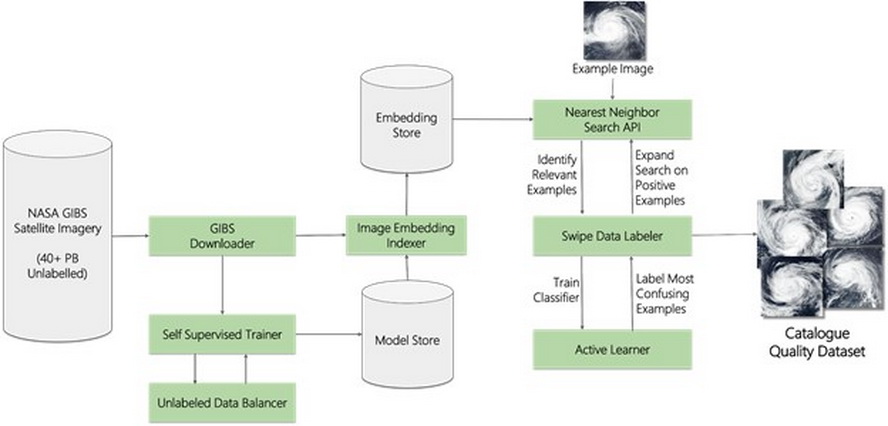

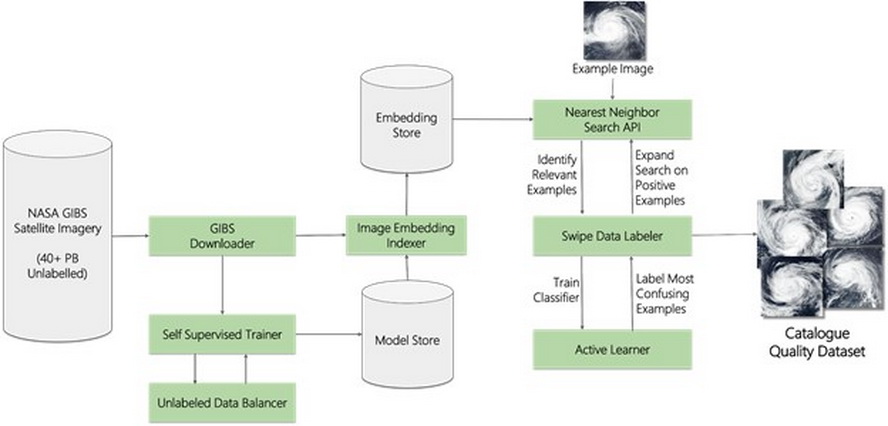

SpaceML provides a collection of machine learning tools. Groups use them for tasks such as self-supervised learning with SimCLR, multi-resolution image search and data labeling. Ease of use is key to the suite of tools.
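For readers unfamiliar with SimCLR, the sketch below illustrates the contrastive objective the method is known for (the NT-Xent loss): two augmented views of the same image should land closer together in embedding space than views of different images. This is a toy, pure-Python illustration and not SpaceML's code; the function names, the temperature value and the two-dimensional embeddings are assumptions made for the example.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent_loss(views_a, views_b, temperature=0.5):
    """Average NT-Xent loss over a batch.

    views_a[i] and views_b[i] are embeddings of two augmentations of
    image i (hypothetical inputs; a real pipeline would get them from
    a neural network).
    """
    embeddings = list(views_a) + list(views_b)
    n = len(views_a)
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)  # index of the other view of the same image
        # Scaled similarity to every other embedding in the batch (self excluded).
        logits = {
            j: cosine(embeddings[i], embeddings[j]) / temperature
            for j in range(2 * n)
            if j != i
        }
        log_denominator = math.log(sum(math.exp(s) for s in logits.values()))
        total += log_denominator - logits[positive]
    return total / (2 * n)


# Toy batch: two images, two views each.
views_a = [[1.0, 0.0], [0.0, 1.0]]
views_b = [[0.9, 0.1], [0.1, 0.9]]
matched = nt_xent_loss(views_a, views_b)
mismatched = nt_xent_loss(views_a, list(reversed(views_b)))
```

In this toy batch the loss is lower when each view is paired with its true partner than when the pairs are swapped, which is the signal that lets a model learn from unlabeled imagery.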

Within their pipeline of model-building tools, SpaceML contributors rely on NVIDIA DALI for fast data preprocessing. DALI helps turn unstructured data that can't be fed directly into convolutional neural networks into inputs suitable for developing classifiers.

“Using DALI we were able to do this relatively quickly,” said Venguswamy.

Findings from SpaceML were published at the Committee on Space Research (COSPAR) so that researchers can replicate their formula.

Classifiers for Big Data

The group developed Curator to train classifiers with a human in the loop, requiring fewer labeled examples because of its self-supervised learning. Curator’s interface is like Tinder, explains Koul, so that novices can swipe left on rejected examples of images for their classifiers or swipe right for those that will be used in the training pipeline.

The process allows them to quickly collect a small set of labeled images and use that against the GIBS Worldview set of the satellite images to find every image in the world that’s a match, creating a massive dataset for further scientific research.
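The matching step described above can be pictured as a similarity search over image embeddings. The following is a minimal sketch, assuming each satellite tile has already been reduced to an embedding vector by a self-supervised model; it is not Curator's actual implementation, and the function names, tile IDs, threshold and vectors are all hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def find_matches(seed_embeddings, archive, threshold=0.9):
    """Return IDs of archive tiles that resemble any labeled seed image.

    seed_embeddings: embeddings of the small, human-approved set.
    archive: dict mapping a tile ID to its embedding (assumed layout).
    Results are ordered from most to least similar.
    """
    scored = []
    for tile_id, embedding in archive.items():
        best = max(cosine_similarity(embedding, seed) for seed in seed_embeddings)
        if best >= threshold:
            scored.append((tile_id, best))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [tile_id for tile_id, _ in scored]


# Toy data: one approved example and three unlabeled tiles.
seeds = [[1.0, 0.0]]
archive = {
    "tile_a": [1.0, 0.1],
    "tile_b": [0.0, 1.0],
    "tile_c": [0.9, 0.2],
}
matches = find_matches(seeds, archive)
```

A brute-force loop like this only works for toy data; at petabyte scale the same idea would presumably need an approximate nearest-neighbor index rather than comparing every tile against every seed.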

“The idea of this entire pipeline was that we can train a self-supervised learning model against the entire Earth, which is a lot of data,” said Venguswamy.

The CNNs are run on instances of NVIDIA GPUs in the cloud.

To learn more about SpaceML, check out these speaker sessions at GTC 2021:

Space ML: Distributed Open-Source Research with Citizen-Scientists for Advancing Space Technology for NASA (GTC registration required to view)

Curator: A No-Code, Self-Supervised Learning and Active Labeling Tool to Create Labeled Image Datasets from Petabyte-Scale Imagery (GTC registration required to view)

The GTC keynote can be viewed on April 12 at 8:30 a.m. Pacific time and will be available for replay.

Photo credit: Emil Jarfelt, Unsplash
